Follow-up on LLM foundations and the Brain-DNA analogy

In my efforts to help you build a convincing intuition about Large Language Models (LLMs), I mentioned in earlier posts the two main scientific pillars they are based on: Information Theory and Probability Theory. On the one hand they help compress the essence of knowledge, and on the other hand they help choose from and correlate the massive number of permutations of what words mean in different contexts. Internet resources rarely, if ever, mention the Theory of Manifolds when helping build intuition about the potency of LLMs. In fact, the manifold substrate of LLMs is what puts together all the bits and pieces of Generative AI intelligence. In my opinion, it occupies an absolutely central place in the LLM magic.

Why manifolds matter

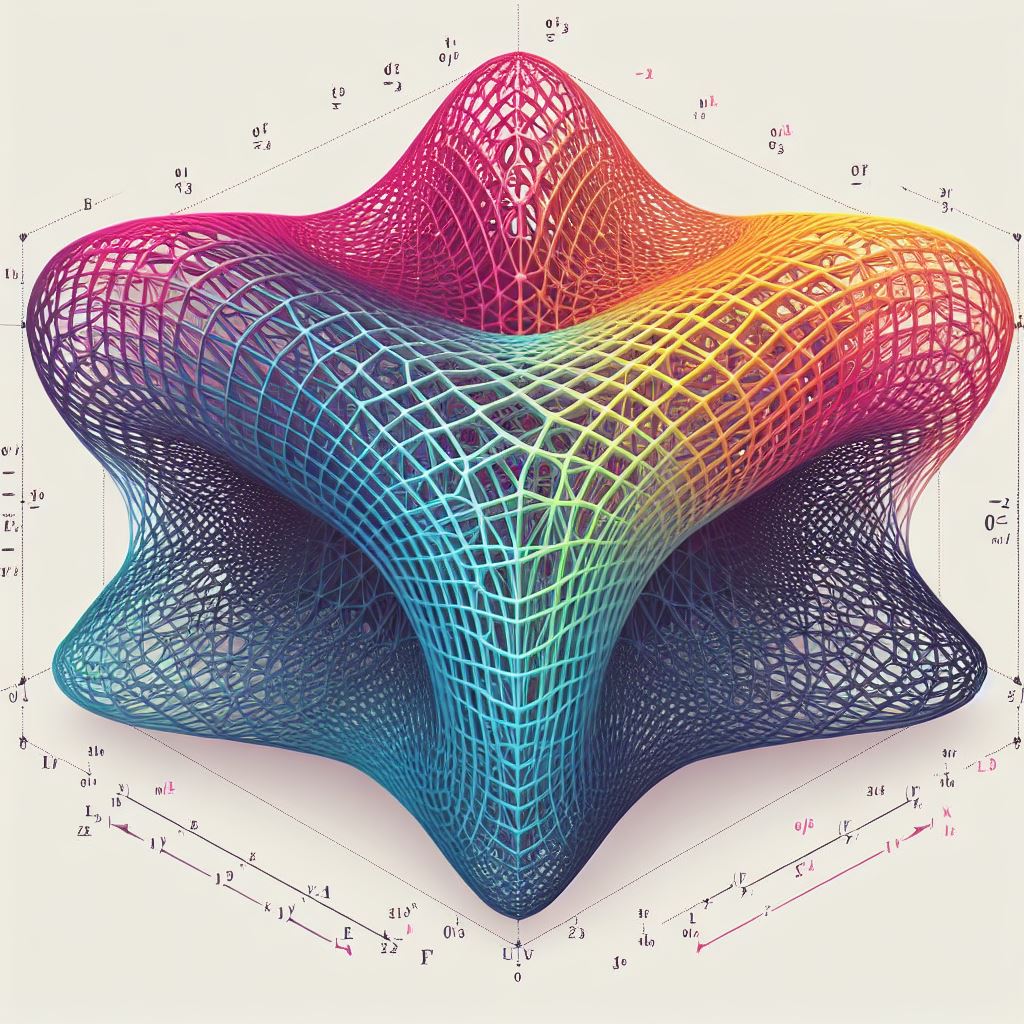

The Theory of Manifolds is a bedrock of particle physics and of the laws governing the universe. In the simplest terms, think of smooth surfaces: planes, uphills and downhills, alleys. When physicists explain the mechanics of elementary particles or the evolution of stars, the complex processes unfolding in multidimensional spaces somehow need to be projected onto much simpler yet expressive frameworks. These frameworks operate in lower-dimensional spaces so that they can be explained and reasoned about mathematically. That is what the Theory of Manifolds covers in a nutshell: projecting complex physical processes onto much lower-dimensional spaces (surfaces), with local aspects in focus. These focus areas, called charts, are cleverly stitched together into one big map, which mathematicians call an atlas.
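To make "charts stitched into one big map" tangible, here is a minimal sketch using the simplest curved manifold, the unit circle. The circle lives in 2-D space but is locally 1-dimensional. No single angle range covers it without a seam, so two overlapping charts are used, and a transition map translates between them where they overlap. The function names are illustrative choices of mine, not from any library.

```python
import math

# The unit circle lives in 2-D space but is intrinsically 1-dimensional:
# near any point, a single number (an angle) says where you are.

def chart_a(x, y):
    """Chart A: every point except (-1, 0), mapped to an angle in (-pi, pi)."""
    if math.isclose(x, -1.0) and abs(y) < 1e-12:
        raise ValueError("(-1, 0) is not covered by chart A")
    return math.atan2(y, x)

def chart_b(x, y):
    """Chart B: every point except (1, 0), mapped to an angle in (0, 2*pi)."""
    if math.isclose(x, 1.0) and abs(y) < 1e-12:
        raise ValueError("(1, 0) is not covered by chart B")
    theta = math.atan2(y, x)
    return theta if theta > 0 else theta + 2 * math.pi

def to_circle(theta):
    """Inverse of either chart: local coordinate -> point in the 2-D plane."""
    return math.cos(theta), math.sin(theta)

def transition_a_to_b(theta_a):
    """On the overlap of the two charts, convert chart A's coordinate into chart B's."""
    return theta_a if theta_a > 0 else theta_a + 2 * math.pi

# Together the two charts cover the whole circle: that pair is an atlas.
point = to_circle(-math.pi / 2)          # the bottom of the circle
print(chart_a(*point), chart_b(*point))  # about -1.5708 and 4.7124
```

Each chart is a simple, flat description of a local piece. The transition map is the "stitching" that keeps the pieces consistent with one another.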

Once patterns and trends reflecting the real world are captured in manifolds in a localized fashion, we can infer predictions and outcomes with much more confidence and translate them back to the original space of observation (that is, back to the complex real world). This is where the whole concept of hidden variables comes into play. But for now I will spare you the hassle of going too deep into that subject. 🙂
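The simplest version of this idea is a flat manifold. Suppose we observe points in 3-D that secretly depend on only two hidden variables. If we find those two directions, we can describe every point with two numbers and translate it back to 3-D with little loss.

This is a minimal sketch, assuming synthetic data scattered around a plane. It uses principal component analysis (PCA) computed by power iteration, a linear technique I am substituting purely for illustration. Real-world manifolds are curved, and LLMs do not literally run PCA.

```python
import math
import random

def mean(points):
    """Average of a list of equal-length vectors."""
    return [sum(p[i] for p in points) / len(points) for i in range(len(points[0]))]

def covariance(points, mu):
    """Covariance matrix (divided by n) of the points around mu."""
    d, n = len(mu), len(points)
    return [[sum((p[i] - mu[i]) * (p[j] - mu[j]) for p in points) / n
             for j in range(d)] for i in range(d)]

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def top_eigenvector(m, iterations=500):
    """Power iteration: repeated multiplication by m converges to its dominant direction."""
    v = normalize([1.0] * len(m))
    for _ in range(iterations):
        v = normalize([sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))])
    mv = [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]
    return sum(a * b for a, b in zip(v, mv)), v  # (eigenvalue, eigenvector)

def principal_directions(m, k):
    """Top-k eigenvectors of a symmetric matrix, using deflation between rounds."""
    m = [row[:] for row in m]
    directions = []
    for _ in range(k):
        lam, v = top_eigenvector(m)
        directions.append(v)
        # Deflation: subtract the found component so the next direction can emerge.
        m = [[m[i][j] - lam * v[i] * v[j] for j in range(len(v))] for i in range(len(v))]
    return directions

# Synthetic 3-D observations that secretly depend on two hidden variables (u, w).
random.seed(0)
e1, e2 = [1/3, 2/3, 2/3], [2/3, 1/3, -2/3]  # orthonormal directions spanning the hidden plane
points = []
for _ in range(500):
    u, w = random.uniform(-3, 3), random.uniform(-1, 1)
    points.append([u * a + w * b + random.gauss(0, 0.01) for a, b in zip(e1, e2)])

mu = mean(points)
d1, d2 = principal_directions(covariance(points, mu), 2)

def encode(p):
    """3-D observation -> 2 hidden coordinates."""
    centered = [x - m for x, m in zip(p, mu)]
    return [sum(x * d for x, d in zip(centered, direction)) for direction in (d1, d2)]

def decode(z):
    """2 hidden coordinates -> back to the 3-D observation space."""
    return [mu[i] + z[0] * d1[i] + z[1] * d2[i] for i in range(3)]

worst = max(math.dist(p, decode(encode(p))) for p in points)
print(f"largest reconstruction error: {worst:.4f}")
```

The round trip `decode(encode(p))` is the "re-translation back to the real world." Its error stays at roughly the size of the added noise, because the two recovered directions carry almost all of the structure.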

Manifolds as the substrate of LLMs and DNNs

Let’s see how manifolds relate to LLMs and Deep Neural Networks (DNNs). It is finally time to talk about LLM parameters, and about how manifolds integrate word embeddings and parameters. In an earlier post I described word embeddings as encoded versions of words. They capture the range of a word's meanings in different contexts, all in the form of vectors of real numbers. As a result of LLM training, a complex multidimensional map made of very many manifold charts gets created (think of them as local projections used for inference). In sum, this humongous map is characterized by tens to hundreds of billions of parameters.
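To see what "words as vectors of real numbers" buys us, here is a minimal sketch with made-up 4-dimensional embeddings. The values are hypothetical and hand-picked for illustration. Real LLM embeddings are learned during training (they are part of the model's parameters) and have hundreds to thousands of dimensions. Cosine similarity, a standard way to compare such vectors, measures whether two words point in similar directions.

```python
import math

# Hypothetical embeddings with made-up values, for illustration only.
embeddings = {
    "king":  [0.80, 0.65, 0.10, 0.05],
    "queen": [0.78, 0.70, 0.12, 0.90],
    "apple": [0.05, 0.10, 0.95, 0.20],
}

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def most_similar(word):
    """The other word whose embedding points in the most similar direction."""
    return max((w for w in embeddings if w != word),
               key=lambda w: cosine_similarity(embeddings[word], embeddings[w]))

print(most_similar("king"))  # queen
```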

From your early geometry days you may remember that a plane in three dimensions is described by the equation ax + by + cz = d, where the parameters a, b, c and d pin down a unique plane (in two dimensions, ax + by = c describes a line). Now picture an LLM as, loosely speaking, expressible by a similar but far more involved equation with billions of such coefficients, which characterize the DNN part of the LLM. Bear with me: this is extremely important for building intuition about the LLM magic.
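The "billions of a, b, c" can be counted. Each neuron computes a weighted sum of its inputs plus a bias, w1·x1 + w2·x2 + … + b, which is the many-dimensional cousin of the line and plane equations. A layer stacks many such sums.

Below is a minimal sketch of the bookkeeping. For transformer-based LLMs I use the common rule of thumb of about 12 × layers × d_model² parameters, which ignores embeddings, biases and normalization. The function names are illustrative. Plugging in GPT-3's published configuration (96 layers, d_model = 12288) gives roughly 174 billion, close to the reported 175 billion.

```python
def dense_layer_params(n_in, n_out):
    """A dense layer computes y = W x + b: n_in * n_out weights plus n_out biases."""
    return n_in * n_out + n_out

def mlp_params(layer_sizes):
    """Total parameters of a fully connected network with the given layer widths."""
    return sum(dense_layer_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))

def transformer_params_estimate(n_layers, d_model):
    """Rule of thumb: ~4*d^2 for attention + ~8*d^2 for the feed-forward block, per layer."""
    return 12 * n_layers * d_model ** 2

print(mlp_params([3, 4, 1]))                   # 21
print(transformer_params_estimate(96, 12288))  # 173946175488, about 174 billion
```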

Now keep picturing the billions of parameters as subtle “traffic” signs pointing toward a practically unlimited number of word choices and related contexts of knowledge encoded in the LLM map. The ultimate goal of training an LLM is to build this massive map of manifolds (smooth planes, uphills, downhills, alleys) of world knowledge. During the inference phase, prompts for all kinds of tasks can navigate this map confidently: sentence completion, text translation, sentiment analysis, you name it.
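How does training "build the map"? At its core, it repeatedly nudges the parameters in the direction that reduces a prediction error. Note that this "downhill" refers to the error (loss) surface, a different landscape from the knowledge map itself.

Here is a minimal sketch, assuming synthetic data from a made-up plane z = 2x − 3y + 0.5 and plain gradient descent on three parameters. LLM training uses the same principle with backpropagation, more elaborate optimizers and billions of parameters, none of which are shown here.

```python
import random

random.seed(1)
# Hypothetical training data sampled from the plane z = 2x - 3y + 0.5, plus a little noise.
data = []
for _ in range(200):
    x, y = random.uniform(-1, 1), random.uniform(-1, 1)
    data.append((x, y, 2 * x - 3 * y + 0.5 + random.gauss(0, 0.01)))

def train(data, lr=0.1, epochs=500):
    """Fit z = a*x + b*y + c by gradient descent on the mean squared error."""
    a = b = c = 0.0
    n = len(data)
    for _ in range(epochs):
        ga = gb = gc = 0.0
        for x, y, z in data:
            err = a * x + b * y + c - z
            # Partial derivatives of the mean squared error.
            ga += 2 * err * x / n
            gb += 2 * err * y / n
            gc += 2 * err / n
        # Take one step downhill on the loss surface.
        a, b, c = a - lr * ga, b - lr * gb, c - lr * gc
    return a, b, c

print(train(data))  # close to (2, -3, 0.5)
```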

Guarantees of believable magic

Word embeddings, including their relationships to each other in different contexts, are learned simultaneously with the configuration of the knowledge map (the parameters of the LLM manifolds) by processing billions of articles of text during LLM training. This is what allows any prompt made up of word-sequence embeddings to organically lay over (or map onto) this massive LLM world-knowledge map. As a consequence, the process of inference (i.e., repeatedly mapping the growing sequence to its next word) leads to a proper continuation of the prompt. In other words, we are mapping prompts onto the planes, dips and alleys of world knowledge represented as a mathematical map of manifolds. Voila! There is no voodoo science here. It is purely based on the sheer beauty of math.
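The "continuous mapping" during inference is autoregressive: predict the next word, append it, and repeat with the longer sequence. Below is a minimal sketch in which a hypothetical, hand-written table of next-word probabilities stands in for a trained model. A real LLM computes these probabilities from the entire context with its billions of parameters, while this toy looks only at the last word (a bigram model) and always picks the most probable option (greedy decoding).

```python
# Hypothetical next-word probabilities standing in for a trained model.
next_word_probs = {
    "the":  {"cat": 0.6, "dog": 0.4},
    "cat":  {"sat": 0.7, "ran": 0.3},
    "dog":  {"ran": 0.8, "sat": 0.2},
    "sat":  {"down": 0.9, "<end>": 0.1},
    "ran":  {"away": 0.85, "<end>": 0.15},
    "down": {"<end>": 1.0},
    "away": {"<end>": 1.0},
}

def complete(prompt, max_new_words=10):
    """Extend the prompt one word at a time using greedy decoding."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = next_word_probs.get(words[-1])
        if not candidates:
            break  # the toy model has nothing to say about this word
        best = max(candidates, key=candidates.get)
        if best == "<end>":
            break
        words.append(best)  # the extended sequence feeds the next step
    return " ".join(words)

print(complete("the"))      # the cat sat down
print(complete("the dog"))  # the dog ran away
```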

Remember the days when you hated Her Majesty Math? It is time to rekindle your affection for her! 🙂

A little bit of math lingo

Mathematically speaking, what we have here is a function (a map) that maps input text to output text. This function is described by the DNN architecture (number of layers, number of neurons in each layer, activation functions, etc.) and its parameters. The universal approximation theorem proves that a neural network with enough neurons and a suitable nonlinear activation can approximate any continuous function on a closed, bounded domain to any desired precision. So, in principle, even a very complex real-world mapping can be approximated with a great degree of precision. In the case of LLMs, we are dealing with a humongous function (hundreds of billions of parameters) that magically, yet on mathematically sound footing, maps some input of interest (e.g., prompts, or sentences to translate into another language) to stunningly believable output.
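Here is a 1-D flavor of why such approximation is possible. A one-hidden-layer network of ReLU neurons (each outputs max(0, x − t)) can trace any piecewise-linear curve, and piecewise-linear curves can hug a smooth function as closely as we like by adding more pieces.

This is a minimal, constructive sketch: I set the weights by hand from the target function rather than learning them. It illustrates the idea behind the theorem but is not its general proof. The function name is illustrative.

```python
import math

def build_relu_network(f, lo, hi, n_knots):
    """One-hidden-layer ReLU network that interpolates f at evenly spaced knots.

    Output: f(t0) + sum_j c_j * relu(x - t_j), a piecewise-linear curve
    whose slope changes by c_j at each knot t_j.
    """
    knots = [lo + (hi - lo) * i / (n_knots - 1) for i in range(n_knots)]
    values = [f(t) for t in knots]
    slopes = [(values[i + 1] - values[i]) / (knots[i + 1] - knots[i])
              for i in range(n_knots - 1)]
    # Output weights: the first slope, then the change in slope at each later knot.
    coeffs = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, n_knots - 1)]
    hidden_biases = [-t for t in knots[:-1]]  # neuron j switches on once x passes knot t_j
    output_bias = values[0]

    def network(x):
        hidden = [max(0.0, x + b) for b in hidden_biases]  # ReLU activations
        return output_bias + sum(c * h for c, h in zip(coeffs, hidden))

    return network

xs = [-math.pi + 2 * math.pi * i / 1000 for i in range(1001)]
for n in (5, 50):
    net = build_relu_network(math.sin, -math.pi, math.pi, n)
    print(n, "knots -> max error", max(abs(net(x) - math.sin(x)) for x in xs))
```

With more neurons the worst-case error shrinks. That is the intuition behind the claim that, given enough capacity, a network can approximate a continuous function as precisely as we want.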

The importance of the manifold notion

Why is the manifold underpinning of LLMs particularly important? Well, we know that current LLMs suffer from hallucinations: at times they produce unreliable and irrelevant results. Localizing problematic areas of LLM functioning and fine-tuning them (i.e., updating specific segments of LLM parameters) is an important task, and manifold intuition should help here. Manifold Theory has helped dissect complex physical phenomena such as the movement of elementary particles in quantum mechanics. I see no reason why it should not be put to heavy use in making LLMs safe and aligned with human values: understandable, manageable, and tailorable with surgical precision to designated goals. That would be a welcome change from the relatively brute-force and opportunistic way today's fine-tuning processes work.
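"Updating specific segments of LLM parameters" has a simple mechanical core: apply the training update only to a designated subset of parameters and keep everything else frozen. Below is a minimal sketch with hypothetical parameter names and plain gradient-descent steps. It shows only the freezing mechanism. Choosing *which* segment to update, the part where manifold intuition could help, is not shown.

```python
def masked_update(params, grads, trainable, lr=0.1):
    """Apply one gradient step only to parameters whose names are in `trainable`.

    All other parameters are returned unchanged (frozen).
    """
    return {
        name: value - lr * grads[name] if name in trainable else value
        for name, value in params.items()
    }

# Hypothetical parameter names and values, for illustration only.
params = {"layer1.w": 0.5, "layer2.w": -1.0, "layer3.w": 2.0}
grads = {"layer1.w": 1.0, "layer2.w": 2.0, "layer3.w": -1.0}

updated = masked_update(params, grads, trainable={"layer2.w"})
print(updated)  # only layer2.w moves, to about -1.2
```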

We will talk more about the role of manifolds in upcoming posts dedicated to LLM training and inference. Onward!
